Introduction to the Limit for Custom GPTs

Custom GPTs, short for Custom Generative Pre-trained Transformers, are specialized AI models tailored for specific tasks. Unlike general-purpose AI like ChatGPT, these models are designed with a narrow focus, making them ideal for particular industries, businesses, or personal needs.

They help automate repetitive tasks, provide tailored responses, and solve unique problems. From assisting with customer support to generating creative content, Custom GPTs are becoming an integral part of modern solutions.

Understanding the limit for Custom GPTs is essential to harness their full potential. These limitations can include data constraints, technological challenges, or ethical considerations. Being aware of such restrictions ensures effective deployment and helps avoid unrealistic expectations.

Importance of Knowing the Limits

- Performance Optimization: Knowing the limits ensures proper training and setup for accurate outputs.

- Avoid Misuse: Misaligned expectations can lead to misapplications and inefficiencies.

- Compliance and Privacy: Staying within the data and ethical boundaries safeguards user trust.

Key Insights

Here’s an overview of limits for Custom GPTs and their implications:

| Type of Limit | Explanation | Impact |
|---|---|---|
| Data Limitations | Limited by the quality and diversity of the training data. | Poor training data can lead to inaccurate or biased responses. |
| Task-Specific Limits | Designed for specific tasks, not suitable for general use. | Ineffectiveness when used outside the intended domain. |
| Ethical Boundaries | Constraints around generating harmful or biased outputs. | Requires monitoring and filters to avoid misuse. |
| Computational Resources | Dependent on the hardware and software capabilities of the user. | High costs and potential inefficiencies for smaller-scale projects. |
| Knowledge Cutoff | Inability to access real-time data unless explicitly integrated. | Outdated information or inability to respond to dynamic queries. |

By understanding these aspects, users can effectively utilize Custom GPTs while acknowledging their constraints.


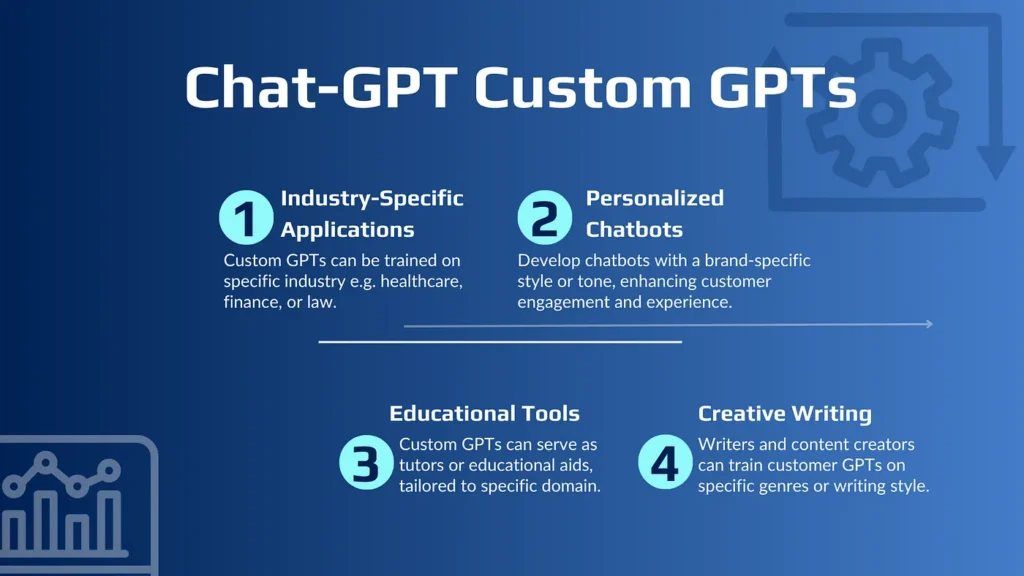

What Are Custom GPTs?

Custom GPTs, or Custom Generative Pre-trained Transformers, are specialized versions of AI language models like OpenAI’s GPT-4.

Unlike standard GPTs, which are designed for broad applications across numerous topics, Custom GPTs are fine-tuned or adapted to meet specific needs, industries, or use cases.

This fine-tuning makes them highly effective for tasks requiring in-depth domain knowledge or specialized functionality.

Features of Custom GPTs:

- Domain Specialization: These models are trained on, or given access to, data from a specific industry or area, such as healthcare, finance, or customer service. This allows them to understand and use field-specific terminology effectively.

- Enhanced Accuracy: Custom GPTs produce more precise and relevant responses compared to generic GPT models, as they are tailored to specific datasets.

- Customized Behavior: Users can adjust these models to reflect a particular tone, style, or set of instructions, ensuring alignment with organizational goals or brand identity.

- Improved Relevance: They are adept at generating content that aligns closely with a specific audience or purpose.

How Custom GPTs Differ from Standard GPTs:

- Training Data: Standard GPTs are trained on diverse datasets from the internet, making them versatile but less specialized. Custom GPTs build on those models and are fine-tuned on, or grounded in, curated domain-specific datasets.

- Use Case Focus: Standard models are generalists, while Custom GPTs are experts in their field.

- Customization: Businesses or individuals can tailor Custom GPTs to perform specific actions, generate certain outputs, or align with particular goals.

Applications of Custom GPTs:

Custom GPTs excel in fields where tailored outputs are essential, such as:

- Writing personalized customer service scripts.

- Generating industry-specific reports.

- Automating repetitive tasks while ensuring precision.

Comparison of Standard and Custom GPTs

| Feature | Standard GPT | Custom GPT |
|---|---|---|
| Training Data | General internet data | Specific domain data |
| Specialization | Broad and general | Focused on a niche |
| Use Cases | Versatile | Tailored to specific needs |
| Accuracy in Context | Moderate | High |
| Customization Options | Limited | Extensive |

By understanding the limits for Custom GPTs, such as their dependence on high-quality domain data and the need for regular updates, users can maximize their effectiveness while ensuring they remain relevant and aligned with specific goals.

Key Limitations of Custom GPTs

Token Limits

Token limits define the amount of text a Custom GPT can process in a single interaction. The limit is set by the underlying model's context window, which input and output share. The commonly quoted figure of about 8,000 tokens matches the original GPT-4 model; newer models accept considerably more.

This limit poses challenges when creating complex or detailed instructions, as exceeding the token count leads to truncation or incomplete processing. Developers often overcome this by splitting data into smaller chunks or by using tools like LangChain to manage extended interactions.
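The chunking approach described above can be sketched in Python. This is a minimal sketch and does not use LangChain's API. It makes three assumptions: text averages roughly 4 characters per token (a common rule of thumb for English, not an exact tokenizer count), part of the shared context window is reserved for the model's output, and splitting happens on word boundaries. `chunk_text`, `estimate_tokens`, and the default limits are hypothetical names and values chosen for illustration.

```python
# Assumption: ~4 characters per token, a rough rule of thumb for English text.
# A model-specific tokenizer gives exact counts; this is only an estimate.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate: character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def chunk_text(text: str, context_limit: int = 8000, reserve_for_output: int = 1000) -> list[str]:
    """Split text on word boundaries so each chunk fits the input share of the window.

    Input and output share one context window, so part of it is reserved for the reply.
    A single word longer than the budget is kept whole in its own chunk.
    """
    budget = context_limit - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output must be smaller than context_limit")
    max_chars = budget * CHARS_PER_TOKEN

    chunks: list[str] = []
    current: list[str] = []
    current_chars = 0
    for word in text.split():
        extra = len(word) + (1 if current else 0)  # +1 for the joining space
        if current and current_chars + extra > max_chars:
            chunks.append(" ".join(current))
            current, current_chars = [word], len(word)
        else:
            current.append(word)
            current_chars += extra
    if current:
        chunks.append(" ".join(current))
    return chunks
```

You can send each chunk in its own request and then combine the partial results. For exact counts, replace `estimate_tokens` with the tokenizer that matches your model.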

File Upload Restrictions

Custom GPTs have strict file upload constraints. Each file cannot exceed 512 MB, and there’s a token limit of 2,000,000 tokens per file. Additionally, individual users typically have a total storage cap of 10 GB, while organizational accounts can store up to 100 GB.

These restrictions can make it hard to handle large datasets or to work with many files at once. For instance, a single GPT can hold at most 20 knowledge files. Working around this requires optimizing file sizes or using external storage solutions.
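You can check a batch of files against these caps locally before uploading. The sketch below uses the figures quoted above: 512 MB per file, a 20-file cap, and 10 GB per user. It treats MB and GB as binary units, which is an assumption. It cannot check the 2,000,000-token-per-file limit, because that depends on each file's extracted text. `UploadCandidate` and `check_uploads` are hypothetical names.

```python
from dataclasses import dataclass

# Limits as quoted in this article; treating MB/GB as binary units is an assumption.
MB = 1024 ** 2
GB = 1024 ** 3
MAX_FILE_BYTES = 512 * MB
MAX_FILES_PER_GPT = 20
USER_STORAGE_BYTES = 10 * GB


@dataclass
class UploadCandidate:
    name: str
    size_bytes: int


def check_uploads(files: list[UploadCandidate], used_storage_bytes: int = 0) -> list[str]:
    """Return a list of problems; an empty list means the batch fits these caps.

    The per-file token limit is not checked, since it depends on extracted text.
    """
    problems: list[str] = []
    if len(files) > MAX_FILES_PER_GPT:
        problems.append(f"{len(files)} files exceeds the {MAX_FILES_PER_GPT}-file cap")
    for f in files:
        if f.size_bytes > MAX_FILE_BYTES:
            problems.append(f"{f.name} is larger than 512 MB")
    total = used_storage_bytes + sum(f.size_bytes for f in files)
    if total > USER_STORAGE_BYTES:
        problems.append("batch would exceed the 10 GB per-user storage cap")
    return problems
```

In practice, each file's size would come from `os.path.getsize`.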

Contextual Memory Constraints

Custom GPTs face challenges in retaining context across interactions. While the models perform well in short-term memory tasks, their ability to recall earlier conversation points diminishes with longer exchanges.

To manage this, developers optimize prompt design or leverage vector databases to maintain context continuity over extended sessions. This ensures the responses remain accurate and relevant, despite inherent memory limits.
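A toy in-memory store can illustrate the vector-database idea. Real systems use a learned embedding model and a dedicated vector database. Here, to keep the sketch dependency-free, word counts stand in for embeddings, and cosine similarity ranks earlier turns by how relevant they are to a new question. `ConversationMemory` is a hypothetical class, not a library API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ConversationMemory:
    """In-memory stand-in for a vector database holding earlier conversation turns."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def recall(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored turns most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

The recalled turns would be prepended to the next prompt. That way, key facts survive even after they scroll out of the model's context window.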

Key Limitations of Custom GPTs

| Limitation | Details | Impact | Solutions |
|---|---|---|---|
| Token Limits | Maximum of ~8,000 tokens per interaction (varies by model). | Difficult to process long instructions/data. | Use tools like LangChain for splitting. |
| File Upload Restrictions | Max file size: 512 MB; Max tokens/file: 2M; Total storage: 10 GB (users), 100 GB (orgs). | Limits working with large datasets/files. | Compress files or use external storage. |
| Contextual Memory Limits | Limited memory retention over extended interactions. | Loss of coherence in long conversations. | Use vector databases for context. |

By understanding and working around the limit for Custom GPTs, developers can better tailor their AI tools to specific use cases while managing constraints effectively.

Technical and Operational Challenges of Custom GPTs

Custom GPTs are powerful tools, but they come with technical and operational challenges that can impact their usability. Let’s break these down in simple terms to understand the key issues.

3.1 Performance Issues

Custom GPTs can struggle with complex tasks or extended conversations. As the input size or dialogue length grows, response times can increase.

These systems are optimized for specific tasks but may struggle with processing heavy workloads or intricate queries. For instance, if a Custom GPT is used for extensive real-time data analysis, it may require significant computational resources, leading to inefficiencies.

Optimization Tip: To overcome this, businesses often streamline queries and prioritize essential tasks to make the system more efficient.
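One way to "prioritize essential tasks" is to give each task a priority and an estimated cost, then fill a fixed budget with the most important work first. This sketch uses a simple greedy pass, not an optimal knapsack solver. The priority scale, the token estimates, and the `plan_tasks` name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    priority: int          # higher = more essential (assumed scale)
    estimated_tokens: int  # hypothetical per-task cost estimate


def plan_tasks(tasks: list[Task], token_budget: int) -> list[str]:
    """Greedily keep the most essential tasks that fit the budget; the rest are deferred."""
    selected: list[str] = []
    used = 0
    # Highest priority first; among equal priorities, cheaper tasks first.
    for task in sorted(tasks, key=lambda t: (-t.priority, t.estimated_tokens)):
        if used + task.estimated_tokens <= token_budget:
            selected.append(task.name)
            used += task.estimated_tokens
    return selected
```

Tasks that are left out can be queued for a later request instead of overloading a single one.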

3.2 Security and Privacy

One critical challenge is ensuring the security of data uploaded to Custom GPTs. While robust measures like data encryption and secure cloud storage (e.g., AWS) are commonly used, there is still a potential risk of data misuse or exposure, particularly if the system lacks proper safeguards.

For instance, sharing sensitive information without encryption could compromise confidentiality.

Custom GPT platforms typically segregate user data to prevent overlap, and many adhere to policies where data is not used for training public AI models. However, users must actively manage settings to delete unnecessary files after processing and ensure compliance with privacy regulations.

Best Practices:

- Use encrypted data uploads and secure connections.

- Regularly delete processed files to minimize storage risks.

- Confirm that the platform complies with industry-standard certifications like SOC 2 Type 2.
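You can enforce the "delete processed files" practice in code for any local copies you create while preparing uploads. This sketch uses Python's standard `tempfile` module to write data to a file that only the current user can read (on POSIX systems). It removes the file when the block exits, even if processing fails. It does not encrypt anything. It also cannot delete files already uploaded to a platform; you must remove those through the platform's own settings. `temporary_upload_file` is a hypothetical helper.

```python
import os
import tempfile
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def temporary_upload_file(data: bytes, suffix: str = ".txt") -> Iterator[str]:
    """Write data to a private temp file and always delete it afterwards."""
    fd, path = tempfile.mkstemp(suffix=suffix)  # owner-only permissions on POSIX
    try:
        with os.fdopen(fd, "wb") as fh:
            fh.write(data)
        yield path  # process or upload the file inside the with-block
    finally:
        if os.path.exists(path):
            os.remove(path)
```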

Summary of Challenges

| Challenge | Details | Suggested Mitigation |
|---|---|---|
| Performance Issues | Slower processing with complex or large-scale queries. | Optimize tasks; prioritize simpler, smaller queries. |
| Security Risks | Potential data exposure during or after uploads. | Encrypt data; delete files post-processing; follow secure storage rules. |
| Privacy Concerns | Risks of data misuse if improperly managed. | Ensure strict privacy settings; use platforms with strong certifications. |

By addressing these challenges, users can better manage the limit for Custom GPTs and unlock their full potential effectively. Always prioritize security and tailor the system to meet your specific operational needs for optimal performance.

Practical Strategies for Managing Custom GPT Limits

Effectively managing the limit for custom GPTs is crucial for optimizing performance and achieving the desired outcomes. This section explores strategies to tackle token limitations, file size constraints, and data integration challenges while enhancing capabilities with APIs.

1. Optimize Token Usage

Custom GPTs have a token limit that covers both input and output. It is often quoted as around 8,000 tokens, though it varies with the underlying model. Managing this limit effectively involves:

- Concise Inputs: Keep prompts and instructions brief and focused. Eliminate unnecessary details while retaining clarity.

- Chunking Information: For lengthy texts, split data into smaller, manageable chunks and process them sequentially.

- Rephrasing for Efficiency: Simplify wording to reduce token consumption while maintaining meaning.

Example: If you are analyzing a document, summarize its key points as bullet points before inputting them.
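You can partly automate concise inputs. The sketch below uses the same rough 4-characters-per-token estimate. It collapses extra whitespace, drops blank and exactly repeated lines, and then compares estimated token counts. This is only a mechanical cleanup; summarizing into bullet points still requires judgment or a model call. `compact_prompt` is a hypothetical name.

```python
import re

CHARS_PER_TOKEN = 4  # rough heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def compact_prompt(text: str) -> str:
    """Collapse runs of spaces/tabs, strip each line, and drop blank and repeated lines."""
    seen: set[str] = set()
    kept: list[str] = []
    for line in text.splitlines():
        line = re.sub(r"[ \t]+", " ", line).strip()
        if line and line not in seen:
            seen.add(line)
            kept.append(line)
    return "\n".join(kept)
```

Dropping repeated lines suits pasted logs or boilerplate. Skip that step if repetition in your prompt is intentional.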

2. Handle Large Datasets Within File Size Constraints

Custom GPTs impose file size restrictions, such as a 512 MB cap per file and 10 GB of total storage per user. To manage large datasets:

- Preprocessing Data: Compress large files or remove redundant data before uploading.

- Segmenting Data: Divide extensive datasets into smaller portions for incremental processing.

- External Storage Tools: Use cloud storage systems to host datasets, referencing necessary data only when needed.
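You can segment a large text or CSV file on line boundaries so that no record is cut in half. This is a minimal sketch that assumes a line-oriented file. `segment_lines` and the sizes used with it are hypothetical. For CSV data you would usually repeat the header row at the top of each segment; this sketch leaves that out.

```python
from typing import Iterable, Iterator


def segment_lines(lines: Iterable[bytes], max_bytes: int) -> Iterator[bytes]:
    """Group lines into segments of at most max_bytes, never splitting a line.

    A single line longer than max_bytes is emitted as its own (oversized) segment.
    """
    segment = bytearray()
    for line in lines:
        if segment and len(segment) + len(line) > max_bytes:
            yield bytes(segment)
            segment = bytearray()
        segment += line
    if segment:
        yield bytes(segment)
```

For example, open a file in binary mode and pass it to `segment_lines(f, 500 * 1024**2)`. The parts it yields stay under 500 MiB, and each can be written to its own file for incremental upload.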

3. Utilize APIs and External Integrations

APIs can offset the limitations of custom GPTs by extending their functionality:

- Dynamic Integrations: Use APIs to retrieve external, real-time data that falls outside the GPT’s memory or knowledge base.

- Custom Workflows: Develop workflows where custom GPTs handle specific tasks, and APIs support additional computations or storage.

- Scaling Capabilities: Combine APIs with other tools, like databases or third-party applications, to bypass token or size constraints.
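In OpenAI's Custom GPTs, you connect external APIs through Actions, which are described with an OpenAPI schema. The sketch below builds such a schema for a hypothetical exchange-rate service. `https://api.example.com`, the `/exchange-rate` path, its parameters, and the response shape are all placeholders, and OpenAPI 3.1.0 is assumed. Check OpenAI's Actions documentation for the exact fields it requires.

```python
import json


def build_action_schema(base_url: str) -> dict:
    """Build a minimal OpenAPI document describing one read-only endpoint.

    The /exchange-rate path, its parameters, and the response shape are
    hypothetical placeholders, not a real service.
    """
    return {
        "openapi": "3.1.0",
        "info": {"title": "Exchange rates (example)", "version": "1.0.0"},
        "servers": [{"url": base_url}],
        "paths": {
            "/exchange-rate": {
                "get": {
                    "operationId": "getExchangeRate",
                    "summary": "Latest exchange rate between two currencies",
                    "parameters": [
                        {"name": "base", "in": "query", "required": True,
                         "schema": {"type": "string"}},
                        {"name": "quote", "in": "query", "required": True,
                         "schema": {"type": "string"}},
                    ],
                    "responses": {
                        "200": {
                            "description": "Current rate",
                            "content": {
                                "application/json": {
                                    "schema": {
                                        "type": "object",
                                        "properties": {"rate": {"type": "number"}},
                                    }
                                }
                            },
                        }
                    },
                }
            }
        },
    }


if __name__ == "__main__":
    # Print JSON suitable for pasting into a GPT's Actions editor (placeholder URL).
    print(json.dumps(build_action_schema("https://api.example.com"), indent=2))
```

The GPT then calls the endpoint only when a question needs live data. This keeps large or fast-changing datasets out of the prompt entirely.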

Summary Table of Strategies

| Challenge | Strategy | Tools/Methods |
|---|---|---|
| Token Limit | Use concise instructions, chunk information | Preprocessing, Summarization |
| File Size Constraints | Compress or segment data | Data Cleaning, Incremental Uploads |
| Integration Challenges | Leverage APIs for supplementary tasks | Custom APIs, External Cloud Services |

By applying these strategies, users can better navigate the limit for custom GPTs and maximize the potential of these powerful tools while addressing operational challenges.

Real-World Applications and Limit Management of Custom GPTs

Custom GPTs have been widely adopted across industries, showcasing their versatility and ability to address specific needs. However, effectively managing the limit for custom GPTs is crucial to ensure their successful application in real-world scenarios.

Case Studies: Success Stories of Custom GPTs

- Gaming Industry: Custom GPTs are used to create dynamic and immersive experiences in games. For instance, they generate engaging narratives, characters, and realistic dialogues. This builds on procedural generation, the technique games like No Man’s Sky use to build vast universes algorithmically without manual scripting.

- Customer Support: Businesses leverage custom GPTs to enhance customer service by generating accurate, human-like responses. For example, companies like Keeper Tax and Viable have implemented AI to improve tax filing accuracy and analyze customer feedback effectively. These applications optimize operations while staying within token and memory limits.

- Healthcare Applications: In the medical field, custom GPTs analyze patient data, generate detailed diagnostic reports, and support decision-making. Their ability to process and synthesize information helps in creating actionable insights, despite token constraints.

- Content Creation: Platforms like Canva use custom GPTs for text-based tasks such as generating personalized marketing content. This customization ensures relevance and engagement for end-users while adhering to model-specific operational constraints.

Strategies for Managing Limits in Applications

- Token Optimization: By designing prompts carefully, developers ensure that essential instructions fit within token limits, enabling smooth and effective communication with the AI.

- Integration with External Systems: Combining custom GPTs with APIs or cloud storage services helps manage larger datasets and extend their functionality beyond intrinsic limits.

- Task Specialization: Defining specific use cases—such as customer support or content generation—reduces unnecessary complexity, keeping resource usage within model constraints.
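Keeping essential instructions within token limits often means trimming conversation history. The sketch below keeps the instructions plus as many recent turns as fit a budget, dropping the oldest turns first (a sliding window). It reuses the rough 4-characters-per-token assumption. `fit_history` and the budget values are hypothetical.

```python
CHARS_PER_TOKEN = 4  # rough heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fit_history(system_prompt: str, turns: list[str], budget: int) -> list[str]:
    """Keep the system prompt plus as many of the most recent turns as fit the budget."""
    remaining = budget - estimate_tokens(system_prompt)
    if remaining < 0:
        raise ValueError("system prompt alone exceeds the budget")
    kept: list[str] = []
    for turn in reversed(turns):  # walk from newest to oldest
        cost = estimate_tokens(turn)
        if cost > remaining:
            break  # stop at the first turn that no longer fits
        kept.append(turn)
        remaining -= cost
    return [system_prompt] + list(reversed(kept))
```

Dropped turns can still be preserved elsewhere, for example in a retrieval store, if later questions may refer back to them.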

Real-World Applications and Limit Management

| Application | Challenge | Solution |
|---|---|---|
| Gaming | Generating large worlds or dialogues | Procedural generation with optimized token use |
| Customer Support | Handling complex customer inquiries | Context-focused prompts and external data integration |
| Healthcare | Processing large datasets | Dividing tasks into manageable chunks with API extensions |
| Content Creation | Generating diverse and personalized outputs | Tailored prompt designs for each campaign or context |

These examples highlight how limits for custom GPTs can be managed effectively to ensure their broad usability across diverse sectors.

Future Prospects and Enhancements for Custom GPTs

The future of Custom GPTs is incredibly promising, with advancements aiming to address current limitations and enhance their utility across diverse applications. Here’s what the future might hold for these advanced AI systems:

Potential Advancements

- Increased Token and Memory Capacity: Future iterations of GPT models may significantly increase token limits and memory capacity, allowing them to handle more complex tasks and longer interactions. This would make them better at processing intricate datasets and extended dialogues.

- Improved User Interactions: Enhancements in natural language understanding and processing can make Custom GPTs more intuitive. Features like adaptive learning and multimodal capabilities (integrating text, images, or video) are expected to improve user engagement and satisfaction.

- Enhanced Customization: Developers are working on deeper customization options, such as integrating APIs, plugins, and external databases. This flexibility will allow users to tailor their GPTs to specific industries or functions.

- Integration of Specialized Skills: Custom GPTs may evolve to perform specialized tasks by coordinating multiple AI systems. For example, a single GPT could work with other AI tools to manage scheduling, data analysis, and customer interactions simultaneously.

- Improved Accessibility: These systems are expected to become more accessible and affordable for developers and small businesses, promoting widespread adoption and innovation.

Speculations on Future Evolution

- AI Ecosystems: Custom GPTs could become part of broader AI ecosystems where multiple GPTs collaborate to provide comprehensive solutions. This would expand their utility across sectors like healthcare, finance, and education.

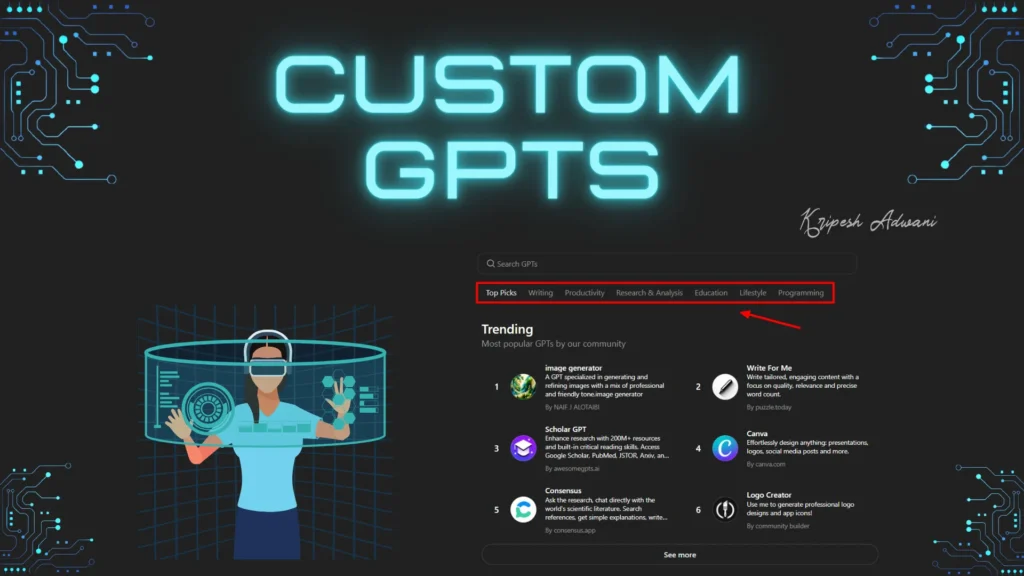

- Monetization Opportunities: With marketplaces like the GPT Store, creators can share and monetize their custom models, fostering innovation and community-driven development.

- Focus on Privacy and Ethics: Enhanced privacy measures and ethical guidelines are expected to build user trust and ensure secure handling of sensitive data.

Key Prospects for Custom GPTs

| Area | Future Prospects |
|---|---|
| Token Limits | Expanded capacity for complex and long-form interactions. |
| Customization | Deeper integrations with APIs and external systems. |
| User Interfaces | More intuitive and user-friendly designs. |
| Collaboration | AI ecosystems for multi-task solutions. |
| Privacy | Stronger safeguards and ethical AI practices. |

If these advancements overcome the current limits for Custom GPTs, they could unlock the tools' full potential and keep them central to the ongoing evolution of AI systems.

The journey ahead promises greater efficiency, innovation, and inclusivity for businesses and individuals alike.

CustomGPT.ai Limits – Document Uploads, Query Limits, and Prompt Lengths Explained

Choosing the Right CustomGPT.ai Plan: Features, Benefits, and Optimization Tips

Selecting the right CustomGPT.ai plan is crucial for maximizing the efficiency and scalability of your AI-powered chatbot. CustomGPT.ai is a third-party chatbot platform, separate from OpenAI’s Custom GPTs, so its limits are set by its own pricing plans. Whether you’re a small startup, a growing business, or an established enterprise, understanding the features and limitations of each plan can help you make an informed decision. This guide explores query limits, document upload capacities, and the factors that affect your chatbot’s performance.

Understanding CustomGPT.ai Query Limits

Query limits determine the number of interactions your chatbot can handle each month. Each plan sets a monthly allowance suited to a different level of traffic:

- Basic Plan: Up to 500 queries per month—ideal for low-traffic applications and testing.

- Standard Plan: Up to 1,000 queries per month—suitable for growing businesses.

- Premium Plan: Up to 5,000 queries per month—designed for high-volume e-commerce and service platforms.

- Enterprise Plan: Customizable query limits to meet your unique requirements.

Query limits are particularly important for businesses expecting frequent user interactions. Exceeding your allowance can interrupt service or force an unexpected upgrade, potentially disrupting operations.
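To avoid an unexpected interruption, you can track usage against the monthly allowance on your own side. This sketch uses the plan figures listed above and assumes an alert at 80% of the limit. `QueryQuota` is a hypothetical class, not part of any CustomGPT.ai SDK. Enterprise is left out because its limits are custom.

```python
from dataclasses import dataclass

# Monthly allowances as listed above; Enterprise is omitted because its limits are custom.
PLAN_QUERY_LIMITS = {"Basic": 500, "Standard": 1_000, "Premium": 5_000}


@dataclass
class QueryQuota:
    plan: str
    used: int = 0
    warn_percent: int = 80  # assumed alert threshold, as a whole-number percentage

    @property
    def limit(self) -> int:
        return PLAN_QUERY_LIMITS[self.plan]

    def record(self, n: int = 1) -> str:
        """Count n queries; return 'ok', 'warn' (near the limit) or 'blocked' (would exceed it)."""
        if self.used + n > self.limit:
            return "blocked"  # the queries are not counted
        self.used += n
        # Integer comparison avoids floating-point edge cases at the threshold.
        if self.used * 100 >= self.warn_percent * self.limit:
            return "warn"
        return "ok"
```

A "warn" result is the point at which to review traffic trends or consider an upgrade, rather than waiting for service to stop.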

Document Upload Limits: How Much Data Can You Handle?

Your chatbot’s ability to provide accurate and comprehensive responses depends on the amount of content it can process. CustomGPT.ai offers varying upload capacities:

- Basic Plan: Supports up to 3 chatbots, each handling 1,000 pages and 30 million words of content.

- Standard Plan: Allows up to 10 chatbots, each managing 5,000 pages and 60 million words.

- Premium Plan: Supports 100 chatbots, each with a massive 20,000 pages and 300 million words.

- Enterprise Plan: Fully customizable limits to align with your business needs.

If your application relies on extensive datasets, choose a plan that ensures ample upload capacity to avoid limitations that might reduce chatbot accuracy.

Prompt Length and Efficiency

Prompt length refers to the input size provided during a single interaction. While no specific prompt size limits are outlined in CustomGPT.ai plans, keeping prompts concise and clear enhances processing efficiency. Overly lengthy inputs may be truncated, impacting response quality and speed.

Plan-Specific Use Cases

Each CustomGPT.ai plan is designed to cater to different business needs. Below, we outline which plan may best suit your requirements:

Basic Plan: A Cost-Effective Entry Point

The Basic Plan is perfect for:

- Small Businesses and Startups: Ideal for those new to AI chatbots or with limited user interactions.

- Niche Websites and Blogs: Great for answering FAQs or providing basic customer support.

- Prototyping: Suitable for testing and developing chatbot functionalities.

Standard Plan: Growing with Your Business

This plan caters to:

- Expanding Businesses: Accommodates increasing customer interaction volumes.

- Multi-Product Companies: Manage multiple chatbots across diverse services.

- Content-Rich Platforms: Handle significant data to deliver accurate and contextual responses.

Premium Plan: For High-Volume Operations

The Premium Plan is designed for:

- E-Commerce Platforms: Addressing daily customer queries with high precision.

- Content-Heavy Enterprises: Suitable for educational institutions or media outlets managing extensive content libraries.

- Service Providers: Ideal for complex services requiring detailed chatbot interactions.

Enterprise Plan: Fully Customizable Solutions

For large organizations with advanced needs, the Enterprise Plan offers:

- Custom Solutions: Includes bespoke integrations and white-labeling options.

- High-Traffic Applications: Scales to handle massive volumes of user interactions.

- Secure Integrations: Provides advanced security features and seamless IT system integrations.

Why These Factors Matter for Your Business

Choosing the right plan is about more than cost management; it ensures your chatbot can scale effectively with your business:

- Manage Growth: If your business is expanding, choose a plan that supports increasing data and query volumes.

- Avoid Downtime: Prevent service disruptions by selecting a plan that meets your interaction demands.

- Boost User Experience: Ensure fast, accurate responses by leveraging sufficient data upload and query capacities.

Optimize Your Investment in AI

To maximize ROI, consider the following tips:

- Analyze Interaction Volume: Estimate monthly queries to select a plan with adequate limits.

- Assess Content Requirements: Ensure your upload capacity matches the scope of your chatbot’s database.

- Plan for Growth: Anticipate future needs and choose a scalable plan to avoid bottlenecks.
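The three tips above can be combined into a small plan-selection helper. It estimates monthly queries and content, applies a growth margin, and picks the smallest plan that covers the result. The figures come from the plan lists earlier in this section and may change. Word limits could be checked the same way but are omitted for brevity. `choose_plan` and its `growth` parameter are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    queries_per_month: int
    chatbots: int
    pages_per_bot: int


# Figures as listed in this article, smallest plan first; Enterprise is the custom fallback.
PLANS = [
    Plan("Basic", 500, 3, 1_000),
    Plan("Standard", 1_000, 10, 5_000),
    Plan("Premium", 5_000, 100, 20_000),
]


def choose_plan(monthly_queries: int, chatbots: int, pages_per_bot: int, growth: float = 0.0) -> str:
    """Return the smallest listed plan that covers projected needs, else 'Enterprise'."""
    factor = 1 + growth  # e.g. growth=0.5 plans for 50% more queries and content
    need_queries = monthly_queries * factor
    need_pages = pages_per_bot * factor
    for plan in PLANS:
        if (need_queries <= plan.queries_per_month
                and chatbots <= plan.chatbots
                and need_pages <= plan.pages_per_bot):
            return plan.name
    return "Enterprise"
```

Applying the growth margin to queries and pages, but not to the chatbot count, is a design choice. Chatbots tend to be added deliberately, while traffic and content grow on their own.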

Conclusion: Understanding the Limit for Custom GPTs

Custom GPTs open up exciting opportunities to create personalized AI tools, but they have inherent limitations that require careful management.

These restrictions—such as token and file size limits, memory constraints, and operational challenges—highlight the importance of thoughtful design and optimization when using them.

Despite these constraints, Custom GPTs have proven to be highly versatile and impactful across industries such as customer service, education, and gaming.

By leveraging creative solutions like integrating external tools or refining datasets, users can push these systems closer to their full potential.

As advancements in AI continue, future updates are likely to address some of these challenges, making Custom GPTs even more user-friendly and powerful.

For now, embracing the current limit for Custom GPTs means finding innovative ways to work within these boundaries. This adaptability ensures that users can unlock the most value while preparing for even more advanced applications in the future.

| Key Takeaway | Description |
|---|---|
| Limits Exist | Token, memory, and file constraints impact capabilities. |
| Optimization is Key | Techniques like refining datasets and prompts are crucial. |
| Creative Use Recommended | Leveraging APIs and integrations can bypass some limits. |
| Future Improvements Likely | AI advancements will reduce constraints over time. |

By understanding and managing the limit for Custom GPTs, users can maximize their benefits while staying prepared for the evolving landscape of AI technology.

Call-to-Action: Share Your Journey with Custom GPTs

Creating and using Custom GPTs opens a world of possibilities, but it also presents unique challenges. As we’ve explored the limit for custom GPTs, understanding these boundaries helps us innovate within them. We now invite you to share your experiences.

Have you encountered difficulties while setting up your Custom GPT? Did you find creative solutions to manage file size or token constraints? Your stories could inspire others to navigate the same path.

What You Can Do Next:

- Share Your Story: Leave a comment or connect on forums where AI enthusiasts and developers discuss solutions to challenges like token limits and memory constraints.

- Explore Resources: Dive into tutorials, community discussions, and open-source tools that help manage the limit for custom GPTs effectively.

- Collaborate with Experts: Engage with professionals in the field to refine your strategies or integrate advanced features like APIs to enhance functionality.

Where to Learn More

- Visit OpenAI’s official documentation to explore Custom GPTs’ functionalities.

- Check out resources like the OpenAI Developer Forum and user guides for practical advice on overcoming the limit for custom GPTs.

Your insights matter. Join the conversation and empower the Custom GPT community with your experiences!

| Action | Details |
|---|---|
| Join Community Discussions | Participate in forums and share tips on managing the limit for custom GPTs. |
| Explore Additional Resources | Access guides and tools to optimize GPT usage. |
| Collaborate for Innovation | Connect with experts to improve your GPT setup. |

This journey is all about learning and sharing. Let’s make the most of the incredible potential Custom GPTs offer!